Chapter 17 ANCOVA: Linear models with categorical and continuous explanatory variables

In the previous chapter we looked at linear models where there is a continuous response variable and two categorical explanatory variables (we call this type of linear model two-way ANOVA). In this chapter we will look at linear models where the explanatory variables are both continuous and categorical. You can think of these as a cross between ANOVA and linear regression. These types of models are often called “ANCOVA” or Analysis of Covariance.

In a simple case, you might be interested in a model with a continuous response variable (e.g. height) and a continuous and a categorical explanatory variable (e.g. hand width and gender). The categorical variable may have any number of levels, but the simplest case is two (e.g. gender with male and female levels).

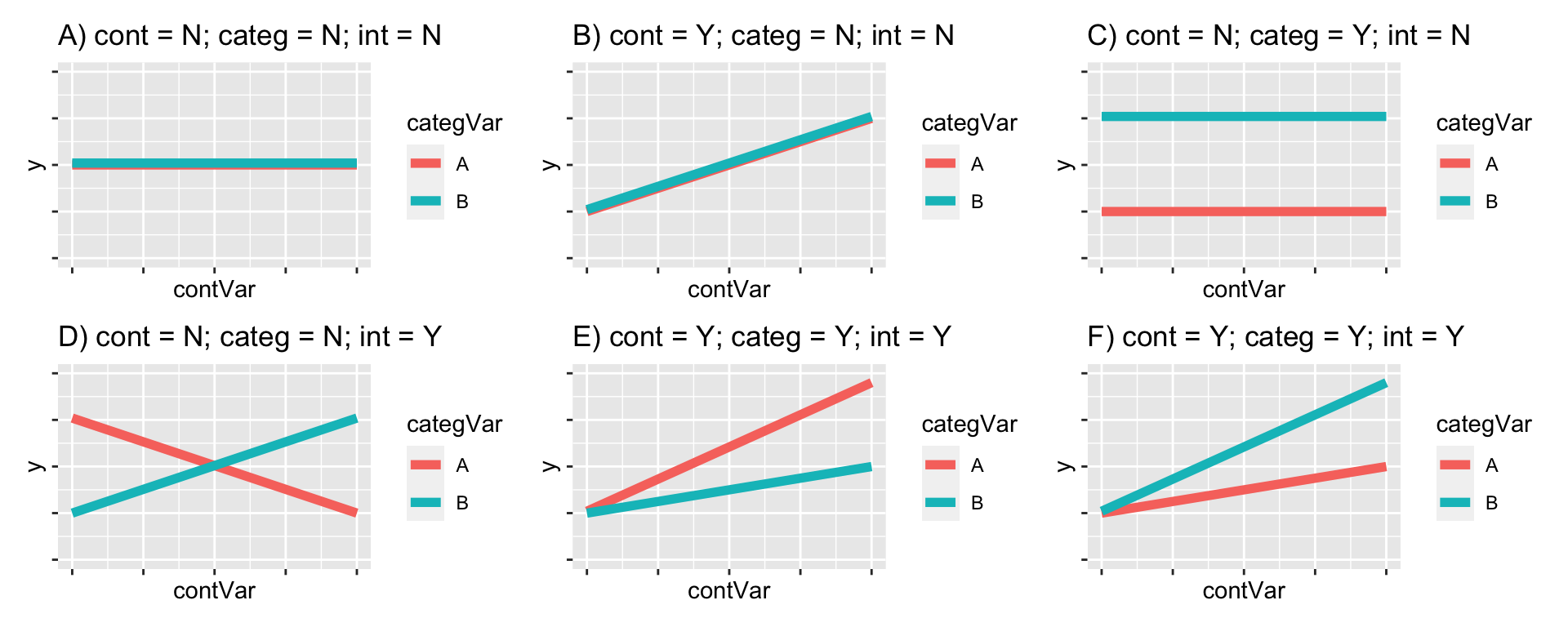

Some of these possible outcomes are illustrated in Figure 17.1. We might see that neither of the two explanatory variables has a significant effect. We might see that one does but not the other. We might see an interaction effect (where the effect of one variable, e.g. hand width, depends on the other, e.g. gender). We might also see an interaction effect but no main effect.

Figure 17.1: Some potential results of the experiment. There may be a significant effect (or not) of both of the main effects (diet and genotype) and there may be a significant interaction effect (or not).

17.1 The height ~ hand width example.

In a previous class (linear regression) you explored the relationship between hand width and height. The aim there was (1) to determine if the relationship (i.e. the slope) was significantly different from 0, and (2) to estimate the equation of the relationship so you could make predictions of height from hand width.

Here we will extend that example by asking whether there are differences between males and females. I am restricting my analysis to 2019 data, but you could do it for any year (or all years, but you might need to first get rid of some outliers using filter).

Remember to load the dplyr, magrittr and

ggplot packages, and to set your working directory

correctly.

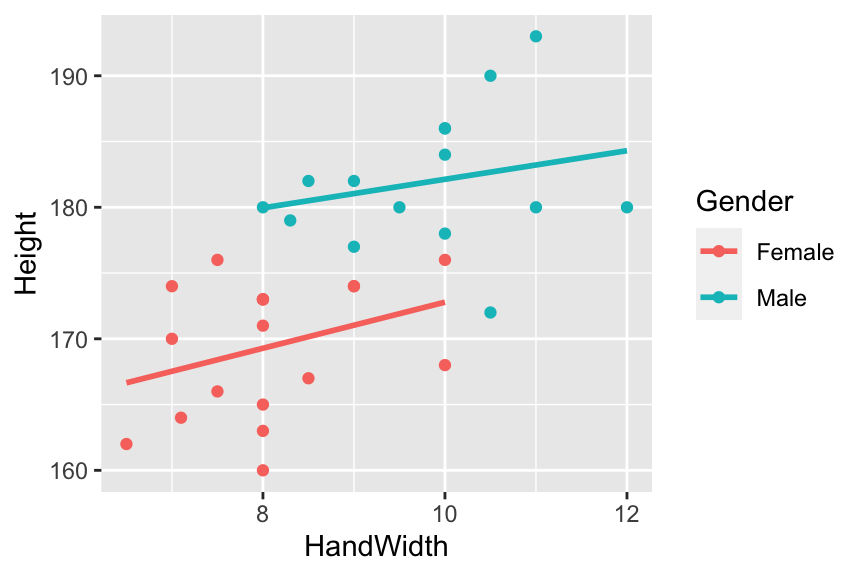

We’ll begin by plotting the data (Figure 17.2).

classData <- read.csv("CourseData/classData.csv") %>%

filter(Year == 2019)

(A <- ggplot(classData, aes(

x = HandWidth, y = Height,

colour = Gender

)) +

geom_point() +

geom_smooth(method = "lm", se = FALSE))

Figure 17.2: ANCOVA on hand width vs. height data in males and females

This shows the results of the ANCOVA model before we have even fit it! You can see that our two continuous variables, Height (the response variable) and HandWidth (one of the explanatory variables) are associated: There is an overall positive relationship between HandWidth and Height You can also see that Gender (the categorical explanatory variable) is important: males tend to be taller than females for any given hand width. For example, a female with hand width of 9cm is ~172cm tall while a male would be about 180cm tall. This shows us that males have a higher intercept than females. There is also a slight difference in the slope of the relationship, with males having a slightly steeper slope than females. We already know that the overall relationship between hand width and height is significant (from the linear regression chapter). These new observations leave us with the following additional questions: (1) are the intercepts for males and females significantly different? (2) are the slopes for males and females significantly different (or would a model with a single slope, but different intercepts be better)?

Now we can fit our model using the lm function. The model formula is Height ~ HandWidth + Gender + HandWidth:Gender. The HandWidth and Gender are the so called main effects while HandWidth:Gender represents the interaction between them (i.e. it is used to address the question “does the effect of hand width differ between the sexes?”). R knows that is fitting an ANCOVA type model rather than a two-way ANOVA because it knows the type of variables that it is dealing with. You can see this if you ask R to tell you what the class of the variables are:

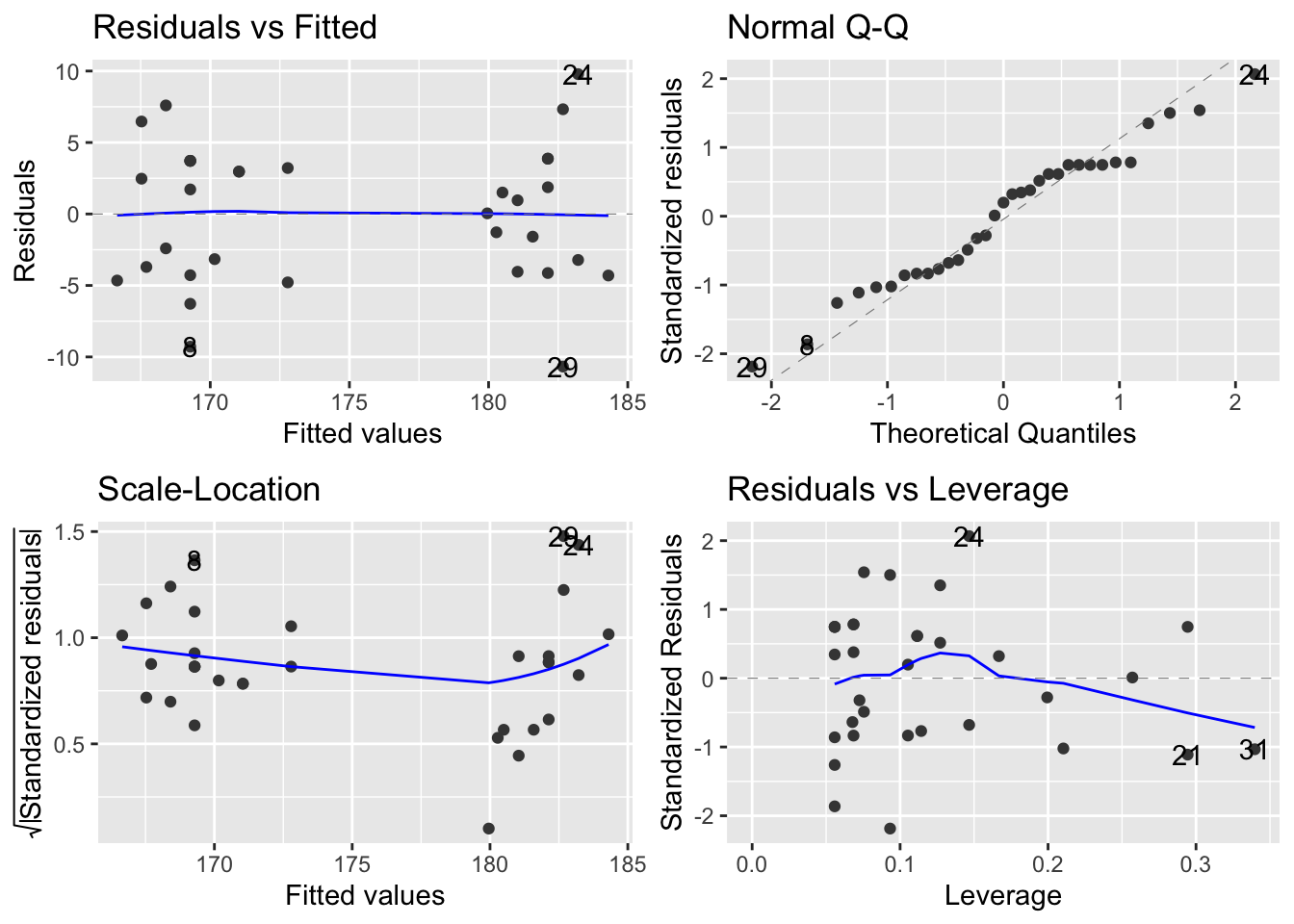

## [1] "character"## [1] "numeric"The first step should, as before, be to check out the diagnostic plots. We should not read to much into these in this case, because we have a small sample size. Nevertheless, lets keep with good habits:

These look good. No evidence of non-normality in the residuals, no heteroscedasticity and no weird outliers.

17.2 Summarising with anova

Now we can get the anova table of our ANCOVA model (yes, I know that sounds strange).

## Analysis of Variance Table

##

## Response: Height

## Df Sum Sq Mean Sq F value Pr(>F)

## HandWidth 1 881.60 881.60 33.5362 2.83e-06 ***

## Gender 1 471.13 471.13 17.9217 0.0002116 ***

## HandWidth:Gender 1 3.65 3.65 0.1388 0.7122211

## Residuals 29 762.35 26.29

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This type of sequential sum of squares Analysis of Variance table should be getting fairly familiar to you now, but let’s unpack what this means. There are four rows in the summary table - one for each of the terms in the model (HandWidth, Gender and HandWidth:Gender), and one for the Residuals (the unexplained variation that remains after fitting the model). The table includes degrees of freedom (Df), sum of squares (Sum Sq), mean sum of squares (Mean Sq) and the associated F and p-values (F value and Pr(>F).

You can interpret the mean sum of squares column in terms of the amount of variation in the response variable (Height) that is explained by the term: The table first tells us the amount of variation (in terms of Mean Sum of Squares) in Height that is captured by a model that includes a common slope for both genders (471.13) . Then it tells us that an additional bit of variation 881.6 is captured if we allow the intercepts to vary with gender. Then it tells us that a small additional amount of variation is explained by allowing the slope to vary between the genders 3.65. Finally, there is a bit of unexplained variation left over (Residuals) 26.29. So you can see that hand width explains most variation, followed by gender, followed by the interaction between them.

You would report from this table something like this:

Hand width and gender both explain a significant amount of the variation in height (ANCOVA - Handwidth: F = 33.536, d.f. = 1 and 29, p<0.001; Gender: 17.922, d.f. = 1 and 29, p<0.001). The interaction effect was not significant, which means that the slopes of the relationship between hand width and height are not significantly different from each other (ANCOVA - F = 17.922, d.f. = 1 and 29, p = 0.712.

It is of course useful to take the interpretation a bit further. You could do this with reference to the plot - e.g. Figure X shows the clear positive relationship between hand width and height and shows that the intercept for females is smaller than that for males. This which means that, for a given hand width, males tend to be taller.

17.3 The summary of coefficients (summary)

To put some quantitative numbers on this description of the pattern we need to get the summary from R.

##

## Call:

## lm(formula = Height ~ HandWidth + Gender + HandWidth:Gender,

## data = classData)

##

## Residuals:

## Min 1Q Median 3Q Max

## -10.6726 -4.0419 0.9581 3.7181 9.7839

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 155.2705 10.4866 14.807 4.69e-15 ***

## HandWidth 1.7514 1.2922 1.355 0.186

## GenderMale 15.9875 16.0430 0.997 0.327

## HandWidth:GenderMale -0.6643 1.7833 -0.373 0.712

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.127 on 29 degrees of freedom

## Multiple R-squared: 0.6402, Adjusted R-squared: 0.603

## F-statistic: 17.2 on 3 and 29 DF, p-value: 1.313e-06This summary table gives the coefficients of the statistical model, their standard errors, and the t-test results of whether the estimate is greater than 0. This is the same as the summary tables given for ANOVA and linear regression.

In the ANOVA summary tables, the estimates were given in relation to the reference level – the (Intercept) and these ANCOVA summary tables are no difference. Interpreting is best done with reference to the graph of the data and fitted model outputs (the graph above).

The reference level (the (Intercept)) is the intercept for the line for the first level of the categorical variable (Females, in this case). Here the model estimates that the intercept for Females is at 155.270 (i.e. if you extended the line out to the left it would eventually cross the y-axis at this point). The next coefficient HandWidth is the slope of this Female line (1.751). Then we have GenderMale: this coefficient is the difference in intercept between the Female and Male lines. This is followed by the intercept for the interaction term HandWidth:GenderMale: this is the difference between slopes for the two genders.

We can therefore do some simple arithmetic to get the equations (i.e. slopes and intercepts) of the lines for both genders. For females this is easy (they are reference level, so you can just read the values directly from the table) - the intercept is 155.270 and the slope is 1.751.

For males the intercept is 155.270 + 15.987 = 171.258. The slope is 1.751 + -0.664 = 1.087.

We could add these equations to our reporting of the results.

Figure X shows the clear positive relationship between hand width and height and shows that the intercept for females is smaller than that for males. This which means that, for a given hand width, males tend to be taller. The model fit for males is Height = ____\(\times\)HandWidth + ____and the fit for females is Height = ____\(\times\)HandWidth + ____

You could check these by using geom_abline to add lines with those equations to the plot (just as a “sanity check”).

A +

geom_abline(intercept = ____, slope = ____) +

geom_abline(intercept = ____, slope = ____)At the bottom of the summary output we are given the \(R^2\) values. Because this model has several terms (i.e. variables) in it we should use the adjusted \(R^2\) values. These have been corrected for the fact that the model has extra explanatory variables. So in this case, we could report that the model explains 60.30% of variation in Height (Adjusted \(R^2\) = 0.60 - not bad!

So, to describe this summary table more generally - the coefficients can be slopes, intercepts, differences between slopes, and differences between intercepts. They are slopes and intercepts for the first level of the categorical variable, and for the subsequent levels they are differences. Piecing these together can be hard to figure out without reference to the plot of the data and model fits - another good reason to plot your data!